While a great number of AI research studies focus on improving the accuracy of AI algorithms, far less attention has been paid to the energy costs involved. A collaboration between researchers at Virginia Tech, Ericsson, and the AI Cross-Center Unit at the Technology Innovation Institute (TII) in the United Arab Emirates recently explored ways to strike the right balance between accuracy, energy usage, and precision for distributed AI.

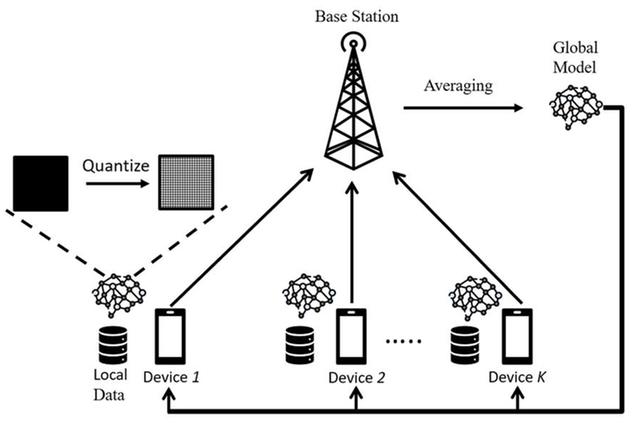

The team focused specifically on how quantization, a technique that creates a more efficient representation of the AI state, affects the balance between accuracy, energy usage, and convergence time within a distributed AI system. The framework the team devised could also be applied to other kinds of improvements.

The research won the best paper award in the Green Communication Systems and Networks Symposium category at the IEEE International Conference on Communications (ICC) 2022, against thousands of entries. “Down the road, this research promises to improve the development of more energy efficient AI applications for home automation, autonomous robotics, and unmanned drones,” said Dr. Walid Saad, Professor in the Electrical and Computer Engineering department at Virginia Tech, who worked on the project.

By focusing on the energy tradeoffs of implementing federated machine learning techniques, the research offers an outlook on a promising new AI approach that allows multiple devices to collaborate and improve the algorithms for each other, while still preserving privacy. The technique, pioneered by Google in 2017, is being explored as a way to train models for cars, autonomous drones, and smartphones by sending a representation of the AI model rather than the raw data itself.

However, these applications can incur tremendous energy overheads as they scale across a more extensive network of devices. “We wanted to understand how we could design green distributed AI without sacrificing accuracy,” explained Dr. Saad.

Different tradeoffs

There are several approaches to designing more efficient methods of implementing AI algorithms at scale, including quantization, sparsification, and knowledge distillation, explained Minsu Kim, Graduate Research Assistant at the Electrical and Computer Engineering department at Virginia Tech, who also contributed to the research.

With quantization, data scientists consider ways to use fewer bits to represent the state of AI models; sparsification uses more efficient ways to represent the changes in the current state of neural networks; and knowledge distillation deploys larger computers to compress the best representations for other nodes. “These three approaches are the most fundamental techniques we are seeing to reduce energy consumption for neural networks right now,” Kim explained.

The team also analyzed other work that found ways to improve communication system performance, such as transmission power and bandwidth. Ultimately, the group decided to focus on the tradeoffs within different quantization approaches that show the most promise for improving energy efficiency for distributed AI running over wireless systems, such as 5G, in the short run. Quantization reduces the data size for describing machine learning model updates, which requires less energy to process and transmit. However, this can also impact the accuracy of models and the amount of time it takes a neural network to converge on an optimal solution.
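To make the tradeoff concrete, here is a minimal sketch of uniform quantization applied to a simulated model update. It is an illustration only, not the researchers' scheme: the function names, the random update, and the choice of a simple min-max uniform quantizer are all assumptions made for this example.

```python
import random


def quantize(values, bits):
    """Uniformly quantize floats onto 2**bits levels spanning their own min..max range.

    Hypothetical helper for illustration; real systems may use other quantizers.
    """
    lo, hi = min(values), max(values)
    levels = 2 ** bits - 1  # number of steps between the lowest and highest level
    if hi == lo or levels == 0:
        return [lo for _ in values]
    step = (hi - lo) / levels
    return [lo + round((v - lo) / step) * step for v in values]


def payload_bits(num_values, bits):
    """Bits needed to send one quantized update (ignoring the min/max header)."""
    return num_values * bits


def mean_abs_error(original, quantized):
    """Average absolute difference introduced by quantization."""
    return sum(abs(x - y) for x, y in zip(original, quantized)) / len(original)


# Simulated model update: 1,000 weights drawn from a standard normal distribution.
random.seed(0)
update = [random.gauss(0.0, 1.0) for _ in range(1000)]

for bits in (4, 8, 16):
    q = quantize(update, bits)
    print(f"{bits:2d} bits: payload={payload_bits(len(update), bits)} bits, "
          f"mean abs error={mean_abs_error(update, q):.6f}")
```

Fewer bits shrink the payload each device must process and transmit, at the cost of a larger quantization error, which is the accuracy side of the tradeoff described above.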

The research uncovered an exciting insight related to the best way to express data. In computing, there has been a steady trend towards more precision for characterizing data, from 16-bit to 32-bit and now 64-bit architectures. However, the team found that, at least for AI applications, more bits mean more energy. Kim said representing data using 32 bits can take two to three times as much data as using 16 bits.

With that, the researchers developed a new theoretical model that can determine the optimal number of bits for representing data, minimizing energy consumption while still achieving a given level of accuracy. This could help balance energy, accuracy, and the neural network convergence time. Theoretically at least, the researchers found ways to reduce energy usage by half. In practical applications, this will likely translate into a 10-20% improvement, noted Dr. Saad.
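The idea of picking the fewest bits that still meet an accuracy target can be sketched with a brute-force search. This is a toy stand-in, not the researchers' theoretical model: it assumes that quantization error is an adequate proxy for accuracy and that fewer bits always mean less energy, and it ignores convergence time entirely. All names and the tolerance values are hypothetical.

```python
import math
import random


def quantize(values, bits):
    """Uniformly quantize floats onto 2**bits levels spanning their own min..max range."""
    lo, hi = min(values), max(values)
    levels = 2 ** bits - 1
    if hi == lo or levels == 0:
        return [lo for _ in values]
    step = (hi - lo) / levels
    return [lo + round((v - lo) / step) * step for v in values]


def rms_error(original, quantized):
    """Root-mean-square error introduced by quantization."""
    total = sum((x - y) ** 2 for x, y in zip(original, quantized))
    return math.sqrt(total / len(original))


def smallest_bit_width(values, tolerance, candidates=range(1, 33)):
    """Return the first (smallest) candidate bit width whose RMS error is within tolerance.

    Returns None if no candidate meets the tolerance.
    """
    for bits in candidates:
        if rms_error(values, quantize(values, bits)) <= tolerance:
            return bits
    return None


# Simulated model update (an assumption for illustration).
random.seed(1)
update = [random.gauss(0.0, 1.0) for _ in range(500)]

for tolerance in (0.1, 0.01, 0.001):
    print(f"tolerance={tolerance}: bits={smallest_bit_width(update, tolerance)}")
```

A tighter tolerance pushes the search toward more bits, which mirrors the balance between accuracy and energy that the model is meant to capture.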

Spurring new research in decentralized AI

The research is set to help reduce the energy required to run AI-powered apps on smartphones, cars, and autonomous drones. Dr. Saad added, “This could help extend the life of a smartphone or autonomous car, so they don’t consume too much energy.”

This brings up the need for researchers to consider all aspects of new AI architecture design with ‘wireless’ factored into the equation. Researchers must consider how each design choice may reduce accuracy, keeping it within acceptable levels while increasing overall energy efficiency. “This provides the first step towards understanding how to control architectures across different devices and still balance energy, accuracy, and convergence time,” said Dr. Saad.

In the long run, he expects future research to explore the tradeoffs between improving privacy and these other factors. This could immediately benefit existing federated learning applications, such as Google’s Gboard application, which uses federated learning to improve its AI-powered keyboard.

Ultimately, it may take another 5-10 years for these ideas to be adopted for more safety-critical use cases like self-driving cars. “This is a first step towards understanding the tradeoffs and how to reduce the architecture of federated learning. This is a crucial step for how a researcher might examine the green impact of AI in distributed learning over wireless systems, and how that interplays with accuracy and convergence,” Dr. Saad added.